
Chapter 8 – Content Moderation and Deplatforming

Before we begin, a few quotes:

Joan Donovan, author of “Navigating the Tech Stack: When, Where and How Should We Moderate Content?”:

“The wave of violence has shown technology companies that communication and coordination flow in tandem. Now that technology corporations are implicated in acts of massive violence by providing and protecting forums for hate speech, CEOs are called to stand on their ethical principles, not just their terms of service.”

Sam Harris, “My Terms of Service” on his Substack blog:

“Having abandoned the digital killing fields of Twitter/X, I know what I don’t want in an online community. This Substack should be a place for honest and useful conversation, on more or less any topic. But intentions matter.

There will be an ironclad no-a**holes policy, enforced with the apparent capriciousness of a bolt of lightning. If you ever find yourself wondering whether to say something vicious to another subscriber here, please take a moment to wonder some more. Just like in life, once you’re gone, you’re gone for good.

So don’t think of this page as another town square, where decent people can cross the street to avoid your ranting or frottage. Think of it as a dinner party, where your host seems to know more than you expected about the arms trade, how people sometimes disappear without a trace in the developing world, and where Vladimir Putin keeps his money.”

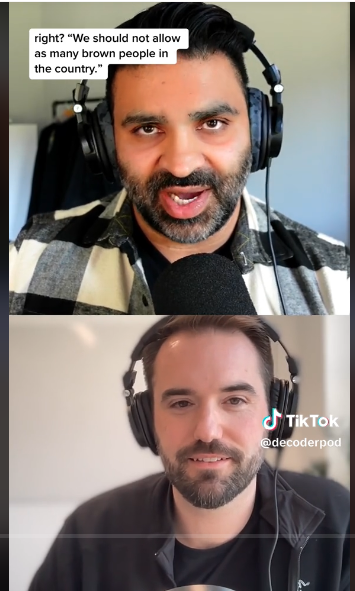

Substack CEO Chris Best defending his content moderation policy:

In this 2:22 clip, Substack CEO Chris Best tries his best to respond to questions about content moderation from Nilay Patel, host of the Decoder podcast.

Overview

Think about this scenario at a small scale:

You are the owner of a restaurant. A patron gets into a loud argument with another patron, using foul language and behaving aggressively. The disruption spoils the restaurant’s ambiance to the point of distracting other diners. A week later, the same patron comes in and starts another disruptive argument with someone else. At this point, you confront the patron and explain, “This is my restaurant, where people come to enjoy a quiet meal with a companion. It’s not the place to get into arguments with other people. If there is a problem, please come to me or anyone on my staff.”

It is to no avail. The same patron returns the following week and once again starts a disruptive argument. Other patrons are clearly frustrated with this person, and you worry that you may lose customers. You decide to remove the disruptive patron and tell them that they are no longer welcome at your restaurant. You are well within your legal discretion to do this and to keep enforcing the ban going forward.

Now, let’s scale this up a bit…

You are the CEO of a company that provides an online community anyone can join, subject to its Terms of Use and other rules of conduct. Your system has over 10 million members. One of them is very prominent, has millions of followers, constantly posts hateful or misleading content, reposts ugly propaganda, and regularly trolls other members. Your business depends on advertising revenue, so when one of your advertisers says they will pull their ads from your system unless something is done about this disruptive member, what do you do?

At first glance, whether a private company has the right to moderate content by removing (perceived) offensive or misleading material is simply a matter of conducting business. The First Amendment to the U.S. Constitution does not even apply to ordinary instances of content moderation, because the social media companies in question are not government entities curtailing free speech.

However, the issue becomes more complicated when you consider that a small handful of tech companies now control the vast majority of the content people engage with, and there is no equivalent “public sphere” outside of their platforms.

For example, what happens when a municipal government entity that uses Facebook blocks a user because it considers the user’s posts offensive? Similarly, when then-president Donald Trump used his personal Twitter account as a forum for his political purposes, the legal question arose whether he was allowed to block users whose views he did not like. In that case, the courts held that even though blocked users could still express their viewpoints elsewhere, Trump’s blocking placed a burden on their speech that violated the First Amendment, especially since he was using a privately owned platform for public, official purposes. These instances point to potential viewpoint discrimination.

This chapter asks: Under what conditions do content moderation and deplatforming fall into the realm of censorship? Is freedom of speech synonymous with an entitlement to freedom of reach?

Numerous legal arguments have claimed that social media systems, by virtue of their function and reach, are “transformed” from private entities into public forums (which must not discriminate against viewpoints). All of these arguments have failed in court. As Justice Kavanaugh wrote for the Supreme Court majority in Manhattan Community Access Corp. v. Halleck (2019), “Providing some kind of forum for speech is not an activity that only governmental entities have traditionally performed. Therefore, a private entity who provides a forum for speech is not transformed by that fact alone into a state actor.”

This chapter provides a range of perspectives on how content on social media systems is moderated (and by whom) and on the means by which a person or entity can be deplatformed (it’s more complex than you’d imagine!).

Key Terms

Deplatforming – An action taken by a tech company to remove or constrain a person’s communication on its private, commercial system due to a violation of its terms of use. The most prominent example of deplatforming followed the 2020 presidential election, when Twitter, Facebook, and other platforms suspended then-president Donald Trump’s accounts in January 2021. Deplatforming can also refer to a hosting provider cutting off services to a communication platform for failing to moderate content considered dangerous to the public.

First Amendment to the Constitution of the United States of America – “Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances.” Please note that the First Amendment applies only to government actions that abridge free speech. It does not restrict the right of private companies to censor content according to the Terms of Service each user agrees to upon joining. Arguments that unabridged free speech on social media is an unalienable right often rest on the mistaken belief that the First Amendment protects all speech in every setting, when in fact it limits only government action. For a summary of typical legal arguments that follow from this principle, review “Six Constitutional Hurdles for Platform Speech Regulation.” On Twitter, an account named The First Amendment speaks in the voice of the First Amendment, responding to issues as they emerge.

§ 230(c) of the Communications Decency Act – This provision of a 1996 act of Congress states, “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.” It protects online platforms from liability for the content posted by their users. Review the infographic published by the Electronic Frontier Foundation, an organization dedicated to free speech issues on the Internet.

Viewpoint discrimination – “When [the government] engages in viewpoint discrimination, it is singling out a particular opinion or perspective on that subject matter for treatment unlike that given to other viewpoints.” Source: The First Amendment Encyclopedia.

Capitalism – An economic and political model that sanctions private ownership of the means of producing goods and services. This is relevant to this area of study because a private company can set its own Terms of Use policy within the limits of statutory rules of commerce. When users agree to the Terms of Use, they are bound by those terms without government recourse, provided those Terms of Use do not violate laws against discrimination toward a protected class.

What should you be focusing on?

Your objectives in this module are:

- Identify the range of methods social media systems use to moderate content.

- Examine the ethical factors involved in employing human content moderators whose work requires constant exposure to violence, cruelty, hatred, and death.

- Develop an executive position on the conditions under which a person or entity should or should not be deplatformed.

Readings & Media

Thematic narrative in this chapter

The readings and media below present the following themes:

- Content moderation removes violent and objectionable content from social media and the Internet.

- Content moderators are exposed to a non-stop flow of horrible content that causes psychological damage.

- Companies that facilitate online communication are making discretionary decisions to remove users or entities they deem to pose a risk to their customers or the public.

- There are several ways that companies can deplatform a person or entity.

Required Podcast: Radiolab – “The Internet Dilemma,” reported by Rachael Cusick, produced by Rachael Cusick and Simon Adler, August 11, 2023 (30:00).

This podcast provides the historical and legal context for corporate liability and responsibility for content posted on a company’s platforms. Because social media systems are not considered publishers (like a newspaper or TV station), Section 230 shields them from liability for harm caused by content their members post.

No matter what happens, who gets hurt, or what harm is done, tech companies can’t be held responsible for the things that happen on their platforms. Section 230 affects the lives of an untold number of people like Matthew (one of the featured interviewees in this podcast), and makes the Internet a far more ominous place for all of us. But also, in a strange twist, it’s what keeps the whole thing up and running in the first place.

Required Article: WIRED Magazine – “The Laborers Who Keep D**k Pics and Beheadings Out of Your Facebook Feed” by Adrien Chen, October 3, 2014. (14 pages)

Be warned in advance: this article contains foul language and references to violent imagery (though it does not show any) as it describes the tasks human content moderators perform to keep social media systems clean.

Chen, A. (2014, October 3). The Laborers Who Keep D**k Pics and Beheadings Out of Your Facebook Feed. WIRED Magazine. https://www.wired.com/2014/10/content-moderation/

Required Article: Axios.com – “Twitter suffers ‘massive drop in revenue,’ Elon Musk says” by Herb Scribner, November 4, 2022 (2 pages)

This brief article describes how advertisers become less willing to use Twitter when its content moderation policy turns the platform into a risky place to advertise their brands. While it does not address First Amendment issues, it illuminates a more immediate concern: the link between content moderation policy and the success of a business model in which advertisers are the primary source of revenue.

Scribner, H. (2022, November 4). Elon Musk: Twitter suffers “massive drop in revenue” after advertisers leave. Axios. https://www.axios.com/2022/11/04/elon-musk-twitter-revenue-drop-advertisers

Required Article: “5 Types Of Content Moderation And How To Scale Using AI” by Thomas Molfetto, Clarifai, Inc., June 13, 2021 (7 pages)

This article describes the different ways that artificial intelligence (AI) can be used to moderate content without human intervention.

Deplatforming: The following media describe the legal framework through which content moderation and deplatforming are viewed from various perspectives and legal interests. In the Supplemental resources, you will find an article that describes the various ways a person or entity can be deplatformed at each layer of service in the Internet architecture.

Required Article: “Normalizing De-Platforming: The Right Not to Tolerate the Intolerant” by Robert Sprague, Professor of Legal Studies in Business, University of Wyoming College of Business Department of Management & Marketing, September 1, 2021. (30 pages – skim)

This article describes the legal boundaries within which online platforms operate. It traces the origins of § 230(c) of the Communications Decency Act and the various legal cases brought over the moderation of online content and the control of users’ access to Internet platforms.

The article provides details on specific legal cases, which you can skim if you like. Your focus, however, should be on how corporate interests interpret their roles, obligations, and responsibility for user-generated content:

- Where is the line of responsibility when users create content with a platform’s tools and that content causes harm?

- At what point, if at all, does the state (our government) assert an interest in sustaining free speech on a privately owned business’s property? Wouldn’t this be considered a Socialist intervention?

- Under what conditions is a corporate action to deplatform a user considered viewpoint discrimination?

- How strong is the argument that, when a person is deplatformed from a global publishing platform, the lack of a comparable alternative venue for their speech amounts to a constraint on free speech?

Required Video: Al Jazeera English – “Trump-free Twitter: The debate over deplatforming | The Listening Post”

It is difficult to find objective, unbiased reporting on deplatforming, since most of those who have been subject to it have been politically right-wing. The program below is produced by Al Jazeera, a global news network that often frames issues from a perspective other than a strictly Western or American one.

View only the first 12 minutes of this video (the rest covers other topics). Consider the following as you watch:

- What does being “silenced” really mean, from a Constitutional perspective? Does being deplatformed prevent anyone from actually speaking, or are there special conditions to the exercise of free speech today that the Founding Fathers could not have anticipated?

- Does government control over a private company’s platforming decisions depart from our traditional capitalist laissez-faire economic model and move into the realm of state-controlled socialism?

Optional: Supplemental resources that are relevant to content moderation

The Intercept – “Facebook tells moderators to allow graphic images of Russian airstrikes but censors Israeli attacks.” Internal memos show Meta deemed attacks on Ukrainian civilians “newsworthy,” prompting claims of a double standard among Palestine advocates.

NYU Stern School – Center for Business and Human Rights – “Who Moderates the Social Media Giants? A Call to End Outsourcing” by Paul M. Barrett, June 2020. This is a comprehensive study of Facebook’s content moderation policy, the outsourcing strategy used to implement it, and the effects of this arrangement. It offers eight recommendations for social media companies to implement. The study is useful for management students considering how to respond to the potentially harmful consequences of business operations.

Centre for International Governance Innovation – “Navigating the Tech Stack: When, Where and How Should We Moderate Content?” by Joan Donovan, October 28, 2019. This article describes how content moderation and deplatforming occur at each level of the Internet’s service structure.

The New York Times – “The Silent Partner Cleaning Up Facebook for $500 Million a Year” by Adam Satariano and Mike Isaac. Published Aug. 31, 2021, updated Oct. 28, 2021. This article traces the history of Facebook’s efforts to outsource content moderation to a third-party provider, Accenture, and how the company realized it needed to expand its resources to keep up with the flood of disturbing content being published all day, every day.